Have you ever been stumped by a bug? Overwhelmed? Did it take hours, days, or weeks to solve? How did you finally manage to get yourself unstuck? Did you just get lucky, or was it skill?

I certainly didn’t always follow a formal debugging process. My first programming gig was writing Perl scripts to process yeast genomes. I would waste days fixing each bug and performance problem. Why? I would randomly guess at a fix and then wait hours for the result. It wasn’t productive, and it wasn’t fun. To spare others the same pain, I’m sharing the process I’ve refined over the years, the one we embrace at MOKA Analytics.

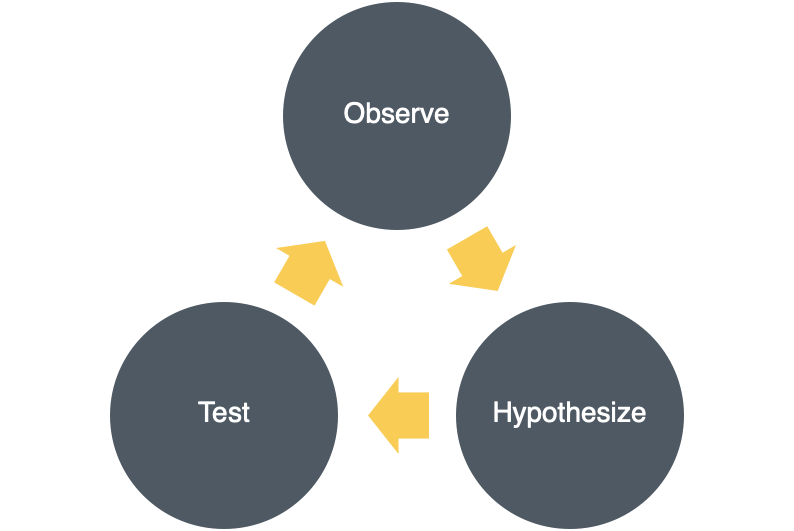

The debugging process is similar to the scientific method, cycling through three steps:

- Observe: catalog what you know and don’t know

- Hypothesize: brainstorm causes and rank by likelihood

- Test: falsify hypotheses by collecting new evidence

These days, I’ll often follow these steps in my head, and in parallel. However, when the going gets tough, I’ve found it critical to follow the steps in order and write everything down. At MOKA, it’s not uncommon to see the outputs of the debugging process scribbled all over our whiteboards.

Now let’s dig into each step of the observe-hypothesize-test cycle.

Observe

The first step is to catalog what you know and what you don’t know. Start with the headline information: the symptoms (error messages, stack traces, etc.), the inputs (user input, data sets, etc.), and the environment (software versions, settings, etc.). When debugging exceptions, I’ll use the debugger to step through the code near where the exception was raised, observing the code path and the values of variables along the way.
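If you work in Python, a minimal sketch of that catalog might look like the following. The helper `catalog_failure` is hypothetical, not part of any library. It bundles the symptoms, inputs, and environment of a caught exception into one record that you can print, paste into a ticket, or copy onto a whiteboard, using only the standard library’s `traceback`, `platform`, and `sys` modules.

```python
import platform
import sys
import traceback


def catalog_failure(exc: BaseException, inputs: dict) -> dict:
    """Collect the headline information about a failure in one record (hypothetical helper)."""
    return {
        # Symptoms: the error message and the full stack trace.
        "error": f"{type(exc).__name__}: {exc}",
        "stack_trace": "".join(
            traceback.format_exception(type(exc), exc, exc.__traceback__)
        ),
        # Inputs: whatever the failing code was given.
        "inputs": inputs,
        # Environment: interpreter version, operating system, and interpreter path.
        "python_version": platform.python_version(),
        "platform": platform.platform(),
        "executable": sys.executable,
    }


if __name__ == "__main__":
    # Hypothetical failing computation: an empty batch triggers a division by zero.
    batch = {"name": "sample-batch", "record_count": 0}
    try:
        mean_length = 1000 / batch["record_count"]
    except ZeroDivisionError as exc:
        for key, value in catalog_failure(exc, batch).items():
            print(f"{key}: {value}")
```

Capturing everything in one place makes it easy to compare the record for a failing run against one for a passing run.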

Pro-Tip: learn to use your IDE’s debugger. If you don’t have an IDE with a debugger, switch IDEs. If your company won’t pay for an IDE with a debugger, switch jobs.

Hypothesize

The next step is to brainstorm potential causes and rank them by likelihood. Don’t be afraid to get creative — your goal here is to ensure the actual cause eventually ends up in the list of hypotheses. The percentage of hypotheses that turn out to be wrong doesn’t matter. It’s also OK to write down hypotheses already falsified by the evidence and then cross them out. Incorrect hypotheses can spark ideas, and every hypothesis you eliminate is progress toward your goal.

Once you’ve recorded your hypotheses, quickly rank them by likelihood, drawing on your observations as well as your own experience. Some of my favorite heuristics are past bugs, areas of complexity, recent changes, test coverage, and who wrote the code (don’t tell my co-workers I said this last one).
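Writing the list down can be as lightweight as a few records. The sketch below is illustrative only: `Hypothesis`, `ranked`, and `whiteboard` are hypothetical names, and each likelihood is a subjective guess you assign from your observations and heuristics, not something computed. Falsified hypotheses are marked rather than deleted, so they stay on the board to spark ideas.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Hypothesis:
    description: str
    likelihood: float  # subjective estimate between 0.0 and 1.0
    falsified: bool = False


def ranked(hypotheses: list[Hypothesis]) -> list[Hypothesis]:
    """Return the hypotheses still standing, most likely first."""
    still_open = [h for h in hypotheses if not h.falsified]
    return sorted(still_open, key=lambda item: item.likelihood, reverse=True)


def whiteboard(hypotheses: list[Hypothesis]) -> list[str]:
    """Render every hypothesis, marking falsified ones with X instead of erasing them."""
    lines = []
    for h in sorted(hypotheses, key=lambda item: item.likelihood, reverse=True):
        mark = "X" if h.falsified else " "
        lines.append(f"[{mark}] {h.likelihood:.0%} {h.description}")
    return lines


if __name__ == "__main__":
    board = [
        Hypothesis("Recent change to the parser mishandles quoted fields", 0.5),
        Hypothesis("Input file uses an unexpected encoding", 0.3),
        Hypothesis("Race condition in the result cache", 0.1),
    ]
    board[1].falsified = True  # e.g., the bug still reproduced with an ASCII-only file
    print("\n".join(whiteboard(board)))
    print("Next to test:", ranked(board)[0].description)
```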

Pro-Tip: voraciously expand your model of the world to remove unknown unknowns. Get acquainted with tricky areas such as multi-threading, race conditions, file encodings, aliasing, Unicode, caching, equality semantics, and differences between operating systems. You don’t need to remember the details off the top of your head; you just need to know that these problem areas exist and can cause bugs.

Test

Finally, you’ll eliminate hypotheses by running tests to collect new evidence. Consider your most likely hypotheses. What tests could you run to falsify them? One approach is to ask: “if this hypothesis were true, what would I expect to observe if I made changes to the inputs or environment?” While coming up with tests, you’ll most likely find that you need to refine your hypotheses to be more specific.

For each test, estimate the payoff and the effort. The payoff is determined by which hypotheses the test would falsify (i.e., its information gain). The effort is how long it would take you to run the test. Run the test that provides the most “bang for the buck”: the best tradeoff of payoff vs. effort.
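One way to make the tradeoff concrete is to score each candidate test by payoff divided by effort. This is a rough sketch under stated assumptions: payoff is approximated as the summed likelihood of the hypotheses a test could falsify (a crude stand-in for information gain, not a formal calculation), effort is an estimate in minutes, and `CandidateTest`, `payoff`, and `best_test` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CandidateTest:
    name: str
    falsifies: set[str]    # hypotheses this test could rule out
    effort_minutes: float  # rough estimate of how long the test takes to run


def payoff(test: CandidateTest, likelihood: dict[str, float]) -> float:
    # Crude proxy for information gain: the total likelihood the test could eliminate.
    return sum(likelihood[h] for h in test.falsifies)


def best_test(tests: list[CandidateTest], likelihood: dict[str, float]) -> CandidateTest:
    """Pick the test with the highest payoff per minute of effort."""
    return max(tests, key=lambda t: payoff(t, likelihood) / t.effort_minutes)


if __name__ == "__main__":
    likelihood = {"parser": 0.5, "encoding": 0.3, "race": 0.2}
    tests = [
        CandidateTest("revert parser change, rerun full job", {"parser"}, 120),
        CandidateTest("rerun on an ASCII-only file", {"encoding"}, 5),
        CandidateTest("rerun single-threaded", {"race"}, 10),
    ]
    print(best_test(tests, likelihood).name)
```

With these made-up numbers, the five-minute ASCII check (0.3 / 5 = 0.06 per minute) beats the two-hour rerun that targets the likeliest hypothesis (0.5 / 120, roughly 0.004 per minute).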

Pro-Tip: for any given test, there’s often a quicker one that falsifies a weaker version of the same hypothesis. These often provide more bang for your buck. For example, what would you learn by testing on a small subset of the input data?
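Here is a sketch of two ways to carve out a small subset, assuming the input is a sequence or stream of records in Python. `head` takes the first few records (the cheapest option), and `sample` draws a reproducible random subset in case the bug depends on records that don’t appear early. Both helper names are hypothetical. If the bug reproduces on the subset, you’ve falsified the weaker hypothesis that it only appears at full scale, in seconds rather than hours.

```python
from __future__ import annotations

import itertools
import random
from typing import Iterable, TypeVar

T = TypeVar("T")


def head(records: Iterable[T], n: int) -> list[T]:
    """The first n records: the cheapest subset to carve out."""
    return list(itertools.islice(records, n))


def sample(records: Iterable[T], n: int, seed: int = 0) -> list[T]:
    """A reproducible random subset of up to n records, drawn from anywhere in the input."""
    pool = list(records)
    rng = random.Random(seed)  # fixed seed so reruns exercise the same subset
    return rng.sample(pool, min(n, len(pool)))


if __name__ == "__main__":
    records = [f"record-{i}" for i in range(100_000)]
    print(head(records, 3))
    print(len(sample(records, 1_000, seed=42)))
```

Fixing the seed matters: a subset that changes on every run would blur whether a fix worked or the bug simply moved out of the sample.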

Putting It All Together

The steps of the debugging process form a cycle. Each time you perform a test, observe the results and update your hypotheses. For most problems, we’ve found that two or three passes through the cycle are enough to diagnose the root cause.

Final Pro-Tip: as you follow this process, patterns will emerge in your observations, hypotheses, and tests. To take advantage of these patterns, invest in logging, diagnostics, and test infrastructure. Your future self will thank you for it.
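As one example of that investment, here is a minimal sketch using Python’s standard `logging` module. The processing step `normalize` and the logger name `pipeline` are placeholders. The step logs its input and output at DEBUG level, so the next time it misbehaves, the observe step starts with evidence instead of guesses.

```python
import logging

logger = logging.getLogger("pipeline")  # placeholder logger name


def configure_logging(level: int = logging.INFO) -> None:
    """Send log records to stderr with timestamps, levels, and logger names."""
    logging.basicConfig(
        level=level,
        format="%(asctime)s %(levelname)s %(name)s: %(message)s",
    )


def normalize(record: dict) -> dict:
    """Hypothetical processing step that leaves a trail of its input and output."""
    logger.debug("input: %r", record)
    result = {key.strip().lower(): value for key, value in record.items()}
    logger.debug("output: %r", result)
    return result


if __name__ == "__main__":
    configure_logging(logging.DEBUG)  # turn on the detailed trail while debugging
    normalize({" Name ": "value"})
```

At the default `INFO` level the DEBUG trail stays silent, so it costs little in normal runs and can be switched on when you need it.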